The Differences Between Data Testing, Data Monitoring, and Data Observability: What’s Right for You?

Introduction

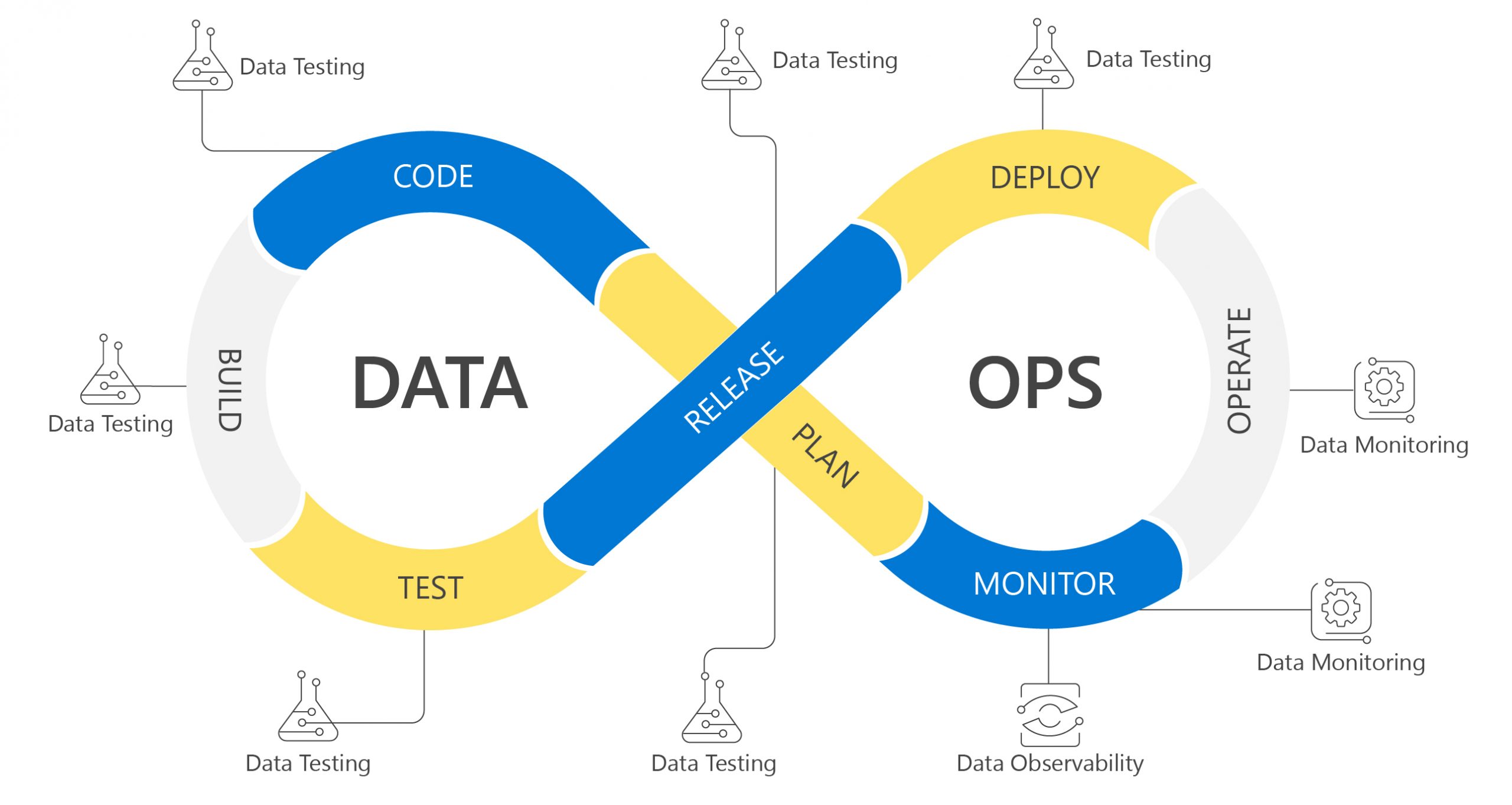

Organizations have realized that achieving reliable data is more than just measuring data quality dimensions. It requires us to adopt a more comprehensive engineering approach that includes the three pillars: data testing, data monitoring, and data observability.

These terms are often used interchangeably, but they represent distinct concepts with their own purposes, methodologies, and outcomes. They also complement each other: you cannot achieve trusted data while ignoring any one of them. Organizations that target very high data reliability (99.99%, for example) are adopting a unified approach that integrates data testing, monitoring, and observability, all available within iceDQ’s Data Reliability Platform.

Throughout this article, I’ll examine these three essential data quality pillars through a structured comparative lens. Each approach will be evaluated based on five key dimensions:

- Timing (when it occurs in the data lifecycle)

- Scope (what aspects of data it addresses)

- Methodology (how it functions)

- Ownership (who typically manages it)

- Outcomes (what business value it delivers)

This framework will help clarify not only what makes testing, monitoring, and observability distinct, but also how they complement each other to form a comprehensive data reliability strategy. By understanding these distinctions, you’ll be equipped to implement the right approaches at the right time for your organization’s specific needs.

Understanding the Differences with a Real-World Example

Let’s illustrate how these three pillars work together with a practical example. Imagine your team is tasked with migrating an on-premises database to a Snowflake data warehouse:

1. Data Testing Phase

a. Data Migration Testing

You need to migrate existing data to the cloud first. Someone on your team must test and certify that the migrated data is exactly the same as the source data. This requires data migration testing and reconciliation between source and cloud environments.

During this phase, you will:

- Compare record counts between source and target.

- Verify data type conversions are accurate.

- Validate that business keys maintain referential integrity.

- Confirm calculated values match between systems.

- Ensure historical data maintains its time-based characteristics.
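
The first few checks above can be sketched as a small reconciliation routine. This is a minimal illustration, not a production approach: two in-memory SQLite databases stand in for the on-premises source and the Snowflake target, and the `orders` table, its columns, and the helper names are all hypothetical.

```python
import sqlite3

def load_rows(conn, table, key, columns):
    """Return {key_value: (col1, col2, ...)} for every row in the table."""
    select_list = ", ".join([key] + columns)
    rows = conn.execute(f"SELECT {select_list} FROM {table}").fetchall()
    return {row[0]: tuple(row[1:]) for row in rows}

def reconcile(source, target, table, key, columns):
    """Compare one table in two databases and summarize the differences."""
    src = load_rows(source, table, key, columns)
    tgt = load_rows(target, table, key, columns)
    shared = src.keys() & tgt.keys()
    return {
        "source_count": len(src),
        "target_count": len(tgt),
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "value_mismatches": sorted(k for k in shared if src[k] != tgt[k]),
    }

def make_db(rows):
    """Build an in-memory database holding a small hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return conn

# Stand-ins for the real systems (assumption: both expose the same table layout).
source = make_db([(1, "A", 10.50), (2, "B", 20.00), (3, "C", 5.25)])
target = make_db([(1, "A", 10.50), (2, "B", 200.00)])  # one wrong value, one missing row

report = reconcile(source, target, "orders", "order_id", ["customer", "amount"])
print(report)
```

Loading every row into memory only works for small tables. For large migrations, the same comparison would more likely be pushed down into each database as counts and per-column aggregates or hashes.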

b. ETL Pipeline Testing

You’ll need to develop new ETL processes or rewrite old ones to populate data into the new Snowflake database. These new ETL processes cannot be deployed to production without thorough ETL pipeline testing.

This testing would include:

- Validating transformation logic produces expected outputs.

- Testing with various data scenarios, including edge cases.

- Verifying error handling works correctly.

- Confirming that incremental loads function as intended.

- Ensuring performance meets required SLAs.
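
As a rough sketch of what such tests can look like, the example below checks a transformation against a normal scenario, an edge case, and an error condition. The `lifetime_value` function and the order fields are hypothetical stand-ins for whatever logic your pipeline actually implements.

```python
from collections import defaultdict
from decimal import Decimal

def lifetime_value(orders):
    """Hypothetical transformation: total completed order amount per customer."""
    totals = defaultdict(Decimal)
    for order in orders:
        amount = Decimal(order["amount"])
        if amount < 0:
            raise ValueError(f"negative amount in order {order['order_id']}")
        if order["status"] == "completed":
            totals[order["customer_id"]] += amount
    return dict(totals)

def run_tests():
    """Run three deterministic checks; any failure raises AssertionError."""
    # Expected output for a known input, including a cancelled order.
    orders = [
        {"order_id": 1, "customer_id": "c1", "amount": "10.50", "status": "completed"},
        {"order_id": 2, "customer_id": "c1", "amount": "4.50", "status": "completed"},
        {"order_id": 3, "customer_id": "c2", "amount": "99.00", "status": "cancelled"},
    ]
    assert lifetime_value(orders) == {"c1": Decimal("15.00")}

    # Edge case: an empty batch produces an empty result.
    assert lifetime_value([]) == {}

    # Error handling: invalid input must be rejected, not silently loaded.
    bad = [{"order_id": 4, "customer_id": "c3", "amount": "-1", "status": "completed"}]
    try:
        lifetime_value(bad)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for a negative amount")
    return True

tests_passed = run_tests()
print("all transformation tests passed")
```

Each test compares actual output to a known expected result, so the outcome is binary. That is what makes checks like these suitable for a CI/CD gate before deployment.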

2. Data Monitoring in Production

Once the system is in production, there are many potential points of failure. Data monitoring lets you catch issues as they occur, stop the process if necessary, and notify the responsible team. It typically covers three areas:

a. Input Data Monitoring

- Monitor incoming files for a valid format, completeness, and timeliness.

- Validate that source systems are providing expected data volumes.

- Check that file headers and structure match requirements.
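
A minimal sketch of these input checks might look like the following. It works on file content passed in as text so that it stays self-contained; the expected header, minimum row count, and delivery deadline are hypothetical values you would take from your own file specification.

```python
import csv
import io
from datetime import datetime

def check_incoming_file(text, expected_header, min_rows, received_at, deadline):
    """Return a list of issues found in a delimited file; empty means it passed."""
    issues = []
    rows = list(csv.reader(io.StringIO(text)))
    header, body = (rows[0], rows[1:]) if rows else ([], [])

    # Format: the header must match the agreed layout exactly.
    if header != expected_header:
        issues.append(f"header mismatch: got {header}")

    # Structure: every data row needs the expected number of columns.
    bad_rows = [n for n, row in enumerate(body, start=2) if len(row) != len(expected_header)]
    if bad_rows:
        issues.append(f"wrong column count on rows {bad_rows}")

    # Completeness: the source should deliver at least the expected volume.
    if len(body) < min_rows:
        issues.append(f"only {len(body)} data rows, expected at least {min_rows}")

    # Timeliness: the file should arrive before the processing deadline.
    if received_at > deadline:
        issues.append("file arrived after the deadline")
    return issues

EXPECTED_HEADER = ["order_id", "customer_id", "amount"]  # hypothetical layout
DEADLINE = datetime(2024, 1, 15, 6, 0)                   # hypothetical cutoff

good_file = "order_id,customer_id,amount\n1,c1,10.50\n2,c2,20.00\n"
bad_file = "order_id,amount\n1,10.50\n"

good_issues = check_incoming_file(
    good_file, EXPECTED_HEADER, 2, datetime(2024, 1, 15, 5, 30), DEADLINE
)
bad_issues = check_incoming_file(
    bad_file, EXPECTED_HEADER, 2, datetime(2024, 1, 15, 7, 0), DEADLINE
)
print(good_issues)
print(bad_issues)
```

In a real pipeline, a non-empty result would typically stop the load and raise a ticket or alert rather than just print.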

b. Process Monitoring

- Track ETL job execution, status, and duration.

- Monitor resource utilization during processing.

- Verify that transformations complete successfully.
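
Status and duration tracking can be sketched as a simple rule over job run records. The `JobRun` structure and the 30-minute limit below are assumptions for illustration; in practice these values would come from your scheduler or orchestration tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

@dataclass
class JobRun:
    """Hypothetical record of one ETL job execution."""
    name: str
    status: str                       # "success", "failed", or "running"
    started_at: datetime
    finished_at: Optional[datetime] = None

def evaluate_run(run, max_duration, now):
    """Return alert messages for a failed or overlong job run."""
    alerts = []
    if run.status == "failed":
        alerts.append(f"{run.name}: job failed")
    # A job that is still running is measured up to the current time.
    duration = (run.finished_at or now) - run.started_at
    if duration > max_duration:
        alerts.append(f"{run.name}: ran for {duration}, limit is {max_duration}")
    return alerts

start = datetime(2024, 1, 15, 2, 0)
now = datetime(2024, 1, 15, 3, 0)
limit = timedelta(minutes=30)

ok_run = JobRun("load_orders", "success", start, start + timedelta(minutes=20))
bad_run = JobRun("load_shipments", "failed", start, start + timedelta(minutes=45))

ok_alerts = evaluate_run(ok_run, limit, now)
bad_alerts = evaluate_run(bad_run, limit, now)
print(ok_alerts)
print(bad_alerts)
```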

c. Data Reconciliation Monitoring

- Ensure that processed data is valid for business use.

- Check for business rule violations, such as shipments without corresponding orders.

- Verify that aggregate values match expected totals.
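
The two rule types above, a cross-table business rule and an aggregate check, can be sketched with plain SQL. The example uses an in-memory SQLite database with hypothetical `orders` and `shipments` tables; the expected total is assumed to come from a control file or the source system.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, amount REAL);
CREATE TABLE shipments (shipment_id INTEGER PRIMARY KEY, order_id INTEGER);
INSERT INTO orders VALUES (1, 10.0), (2, 20.0);
INSERT INTO shipments VALUES (100, 1), (101, 2), (102, 3);
""")

def orphan_shipments(conn):
    """Business rule: every shipment must reference an existing order."""
    rows = conn.execute("""
        SELECT s.shipment_id
        FROM shipments s
        LEFT JOIN orders o ON o.order_id = s.order_id
        WHERE o.order_id IS NULL
        ORDER BY s.shipment_id
    """).fetchall()
    return [row[0] for row in rows]

def total_matches(conn, expected_total, tolerance=0.01):
    """Aggregate check: the loaded order total must match the expected total."""
    (actual,) = conn.execute("SELECT COALESCE(SUM(amount), 0) FROM orders").fetchone()
    return abs(actual - expected_total) <= tolerance

orphans = orphan_shipments(conn)
print("shipments without orders:", orphans)
print("order total matches:", total_matches(conn, expected_total=30.0))
```

Both checks return a definite pass or fail, which is what allows monitoring to stop a pipeline run when a rule is violated.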

3. Data Observability for Pattern Detection

Even with testing and monitoring in place, some issues are only detectable through observability practices.

For example:

- Identifying unusual patterns like sudden drops in order volumes.

- Detecting gradual data drift that might indicate changing customer behavior.

- Spotting performance degradation across multiple systems.

- Identifying correlations between seemingly unrelated data points.

Observability uses machine learning and statistical methods to detect patterns that rules-based approaches can miss. Its findings are probabilistic rather than definitive, but it excels at uncovering subtle anomalies that warrant investigation.
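
To make the contrast with rules concrete, here is a minimal statistical sketch that flags a sudden drop in daily order volume. It uses a trailing-window z-score, which is only one simple technique chosen for illustration; the window size, threshold, and sample data are assumptions, and real observability tools may use quite different models.

```python
from statistics import mean, stdev

def find_anomalies(values, window=7, threshold=3.0):
    """Return indexes whose value deviates strongly from the trailing window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: treat any change at all as notable.
            if values[i] != mu:
                anomalies.append(i)
            continue
        z_score = (values[i] - mu) / sigma
        if abs(z_score) > threshold:
            anomalies.append(i)
    return anomalies

# Hypothetical daily order counts with a sudden drop on day index 8.
daily_orders = [1000, 1020, 990, 1010, 1005, 995, 1015, 1008, 400, 1002]

anomalies = find_anomalies(daily_orders)
print("anomalous day indexes:", anomalies)
```

No one wrote a rule saying that 400 orders is too few. The value is flagged only because it is far outside what recent history suggests. The result is a signal to investigate, not proof of a defect, and an outlier also widens the window that follows it, which is one reason production systems tend to use more robust methods.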

Key Difference Table

| Item | Data Testing | Data Monitoring | Data Observability |
| --- | --- | --- | --- |
| Definition | Data testing is a quality assurance process that verifies data processes and data to ensure they meet quality requirements. | Data monitoring is about proactively creating checks and rules to understand the state of data and data pipelines. | Data observability is the property of the system that provides insights into its current state. |
| Use Cases | Data Migration Testing, Data Warehouse Testing, ETL Data Pipeline Testing, BI Report Testing, Database Testing. | Monitor Files, Data Pipelines, Data Reconciliation, and Data Integrity. Implement Compliance for BCBS-239, FINRA, PII/PCI, Solvency II, CCPA, GDPR. | Schema, Data, Spend, Usage, Data Drift, Infrastructure, Schema Drift. |
| Outcomes / Goals | Prevent faulty data processes from entering production. Test to ensure that data processes and orchestration are developed correctly. | Stop bad data from entering production data pipelines. Ensure there are no runtime issues in the data pipeline execution or orchestration. | Ensure any anomalies in the data are detected before consumption. Observability is the last step to detect and catch any issues that might have occurred despite earlier precautions. |
| Ownership | Developers, QA Team, Release Managers, UAT Team. | Operations, Compliance. | Business Users, Data Governance, Data Quality. |
| Data Granularity | From aggregate to granular data (Tables/Files, Records, Columns). | Both granular and aggregate data. | Aggregate, Numeric Only, Probabilistic, AI/ML Needs, Historical Data. |
| Timing / Environment | Non-production Environments: Development, QA, System Integration, UAT. | Production Environments. | Production Environments. |
| Inputs | Data. | Data. | Data and logs. |
| Methodology | Regex, pattern detection, and rules. Testing typically involves threshold-based verification. | Regex, pattern detection, and rules. Monitoring typically involves threshold-based alerting. | Historical time series, trend analysis, and anomaly detection. Observability employs advanced techniques like correlation and anomaly detection. |
| AI | No. It is not possible as there is no historical data to learn from. | Limited, due to complex business rules. | Yes, because it is based on patterns and anomaly detection. |
| Defect Detection | Deterministic: Binary detection, based on rules. | Deterministic: Binary detection, based on rules. | Fuzzy, based on heuristic trial and error or loosely defined rules. |
| Integrations | Test Case Management Suites, CI/CD. | Issue Management System. | Issue Management System. |
| Example | Verifying that a transformation correctly calculates customer lifetime value. | Receipt validation based on number of records vs. a predefined expected value. | Detecting sudden changes in file size or record count. |

Decision Framework: When to Use Each Approach

Choosing the right approach depends on several factors:

Use Data Testing When:

- Implementing new data processes.

- Making changes to existing pipelines.

- Migrating data between systems.

- Validating business logic implementation.

- Preparing for regulatory audits.

Use Data Monitoring When:

- Operating critical data pipelines.

- Tracking real-time process orchestration.

- Validating data against defined SLAs.

- Ensuring compliance requirements are met.

- Maintaining operational reliability.

- Performing data reconciliation based on business rules.

Use Data Observability When:

- Analyzing system-wide behavior.

- Detecting emerging patterns.

- Investigating root causes.

- Optimizing resource utilization.

- Making strategic, data-driven decisions.

The most effective data quality strategies implement all three approaches with clear handoffs between them.

Conclusion

As the comparison shows, data testing, monitoring, and observability each offer distinct value, and they complement one another.

Data testing is primarily used in the development phase, while monitoring and observability operate in production. Data testing serves a QA purpose, data monitoring helps operations teams track their processes, and data observability is best suited for detecting anomalies and data quality issues that rules did not anticipate.

Based on these differences, I encourage you to assess your current data quality strategy, identify any missing components, and incorporate them into a comprehensive approach.

Additional Resources

- Read more about Data Testing concepts.

- Learn more about Data Monitoring concepts.

- Understand Data Observability concepts.

- Read our Data Reliability Engineering thesis.
