Data Warehouse vs Data Lake vs Data Lakehouse: Understanding Modern Data Architecture

A Complete Guide to Choosing the Right Data Platform for Your Enterprise

Table of Contents

- Data Warehouses: Separating Operations from

- Data Lakes: Embracing the Data Deluge

- Data Lakehouse: The Best of Both Worlds

- Data warehouse vs Data Lake vs Data Lake House

- The Hidden Challenge: Data Quality Across Any Architecture

- How iceDQ Validates & Reconcile your Data

- iceDQ Capabilities for Modern Data Platforms

- Business Impact: From Data Quality to Business Value

- Conclusion

- Ready to Ensure Data Quality Across Your Data Platform?

Table of Contents

- Data Warehouses: Separating Operations from

- Data Lakes: Embracing the Data Deluge

- Data Lakehouse: The Best of Both Worlds

- Data warehouse vs Data Lake vs Data Lake House

- The Hidden Challenge: Data Quality Across Any Architecture

- How iceDQ Validates & Reconcile your Data

- iceDQ Capabilities for Modern Data Platforms

- Business Impact: From Data Quality to Business Value

- Conclusion

- Ready to Ensure Data Quality Across Your Data Platform?

Key Takeaway: The evolution from data warehouses to data lakes to data lakehouses represents decades of learning about enterprise data management. Each architecture solves specific problems—but introduces new challenges around data quality, governance, and reliability that require continuous validation.

The progression from OLTP systems through data warehouses and data lakes to contemporary data lakehouses reflects decades of accumulated expertise in managing, transforming, and extracting value from enterprise data.

Yet with each architectural advancement comes a critical challenge: ensuring data integrity as information flows through increasingly complex pipelines. This article explores why enterprises have adopted each successive architecture.

Data Warehouses: Separating Operations from Analytics

Pioneers like Bill Inmon and Ralph Kimball introduced data warehouses to separate transactional from analytical workloads. Data is extracted from multiple OLTP databases and integrated into a warehouse with models suited for large data and queries. While effective for structured data, data warehouses struggle with semi-structured and unstructured formats. Attempts to add blob storage were largely unsuccessful.

- Schema rigidity

- Upfront modeling doesn’t suit semi- or unstructured data

- Proprietary warehouse storage costs can rise rapidly at scale

Data Lakes: Embracing the Data Deluge

The explosion of big data-clickstreams, IoT sensors, social media feeds, log files-overwhelmed traditional data warehouses led to the emergence of Data lakes. It offered a fundamentally different philosophy: store everything first, figure out how to use it later.

Built on distributed file systems like Hadoop HDFS and later cloud object storage (S3, Azure Blob, GCS), data lakes embrace schema-on-read. Raw data lands in its native format such as JSON, Parquet, Avro, CSV, images, and video without upfront transformation. Data lakes use schema-on-read, but often face issues:

- Lack of governance led to “data swamps.”

- No ACID transactions made updates unreliable.

- Query performance lagged behind warehouses.

- Data quality problems increased.

Data Lakehouse: The Best of Both Worlds

The term “Data Lakehouse” was first used by Pentaho co-founder James Dixon and later formalized in Databricks’ 2020 blog post.

Rather than running separate systems for data science and BI, organizations can now use a single platform that stores data in open formats on low-cost object storage while delivering performance, reliability, and governance.

Technologies like Delta Lake, Apache Iceberg, and Apache Hudi make this possible by adding ACID transactions, schema enforcement, and governance to scalable data lakes.

Examples of data lakehouse:

- Databricks Lakehouse Platform + Delta Lake

- Snowflake Unistore + Iceberg

- AWS + Apache Iceberg

Data warehouse vs Data Lake vs Data Lake House

| Feature | Data Warehouse | Data Lake | Data Lakehouse |

| Term Origin | Concept developed by Bill Inmon (1990s) and Ralph Kimball with dimensional modeling | Term coined by James Dixon, CTO of Pentaho, in 2010 | Architecture popularized by Databricks (2020); enabled by Delta Lake, Iceberg, Hudi table formats |

| Definition | A structured data management system storing current and historical data for business intelligence and reporting | A centralized repository storing raw data in all formats-structured, semi-structured, and unstructured-at any scale | A modern architecture combining the flexibility of data lakes with the ACID reliability, governance, and performance of data warehouses |

| Primary Purpose | BI reporting, dashboards, analytics, and historical trend analysis for business decision-making | Store raw data at scale for machine learning, advanced analytics, data science exploration, and streaming ingestion | Unified platform supporting BI, ML, streaming, and large-scale analytics without data duplication across systems |

| Data Types Supported | Primarily structured data only (tables, rows, columns in relational format) | All types: structured, semi-structured (JSON, XML), and unstructured (images, video, logs, documents) | All data types with added governance, schema management, and structured query support across all formats |

| Schema Handling | Schema-on-Write: Data must be modeled and structured before loading into the warehouse | Schema-on-Read: Flexible approach where structure is applied when data is queried, not when stored | Flexible: Schema enforcement with evolution support via Delta Lake, Iceberg, or Hudi; schema-on-read where needed |

| ACID Transactions | Full ACID support for reliable updates, deletes, and concurrent access | Limited or no ACID support; updates and deletes are difficult | Full ACID via Delta Lake, Iceberg, or Hudi table formats |

| Governance & Reliability | High governance: consistent, integrated, subject-oriented; ACID transactions; non-volatile with mature security | Low governance: risk of becoming a "data swamp" without strict management; limited ACID support | High governance: full ACID transactions, time travel, indexing, fine-grained access control, unified data catalog |

| Query Performance | Excellent: optimized for fast analytical queries (OLAP) with indexing, caching, and query optimization | Variable: performance depends on file formats and partitioning; not optimized for BI workloads | High performance: SQL + ML optimizations via Z-ordering, caching, and unified architecture |

| Cost Model | Higher cost: proprietary storage formats, compute + storage coupling, vendor pricing at scale | Low cost: commodity cloud object storage (S3, ADLS, GCS) with pay-per-use pricing | Low cost: open formats on cloud object storage with warehouse-like capabilities; compute/storage separated |

| Streaming Support | Limited: primarily batch-oriented ETL architecture; real-time requires additional tooling | Good: native streaming support with Kafka, Spark Streaming, Flink for real-time ingestion | Unified: batch and streaming on same tables; real-time analytics with consistent data |

| Data Quality Control | Enforced at load time; constraints, data types, validation rules | Minimal; quality issues often discovered late in process | Flexible; schema enforcement + expectations/constraints at each layer |

| Time Travel / Versioning | Limited: some platforms offer point-in-time recovery; varies by vendor | None natively: requires manual versioning, file copies, or custom solutions | Built-in: query historical versions, rollback changes, audit trail, data recovery |

| Vendor Lock-in | High: proprietary formats, expensive and complex migration between platforms | Low: open file formats (Parquet, ORC, Avro) on standard object storage | Low: open table formats (Delta, Iceberg, Hudi) on open storage; interoperable |

| Primary Users | Business analysts, BI developers, report consumers, finance teams | Data scientists, ML engineers, data engineers, research teams | All users: analysts, scientists, engineers on a single unified platform |

| Common Technologies | Snowflake, Google BigQuery, Amazon Redshift, Azure Synapse, Teradata, Oracle | AWS S3, Azure Data Lake Storage, Google Cloud Storage, Hadoop HDFS | Databricks Lakehouse, Delta Lake, Apache Iceberg, Apache Hudi, Dremio |

| Best For | Dashboards, financial reporting, regulatory compliance, SQL-centric teams, mature BI needs | ML/data science exploration, raw data preservation, large-scale ingestion, cost-sensitive storage | Enterprises needing one platform for BI + ML without data duplication; modern unified analytics |

| Example Platforms | Snowflake, Teradata, Oracle, SQL Server, Redshift, BigQuery | Hadoop HDFS, AWS S3, Azure Data Lake, Google Cloud Storage | Databricks, Delta Lake, Apache Iceberg, Apache Hudi, Dremio |

The Hidden Challenge: Data Quality Across Any Architecture

Whether you choose a data warehouse, data lake, or data lakehouse setup, maintaining data integrity throughout the pipeline remains a significant challenge.

Common Data Quality Failures

- Schema drift: Source system changes that aren’t detected until data corruption occurs downstream

- Incomplete extraction: Records missing due to query timeouts, API pagination errors, or network failures

- Transformation errors: Incorrect business rule implementation causing wrong calculations, filtering or aggregations.

- Report discrepancies: BI dashboards showing different numbers than source systems

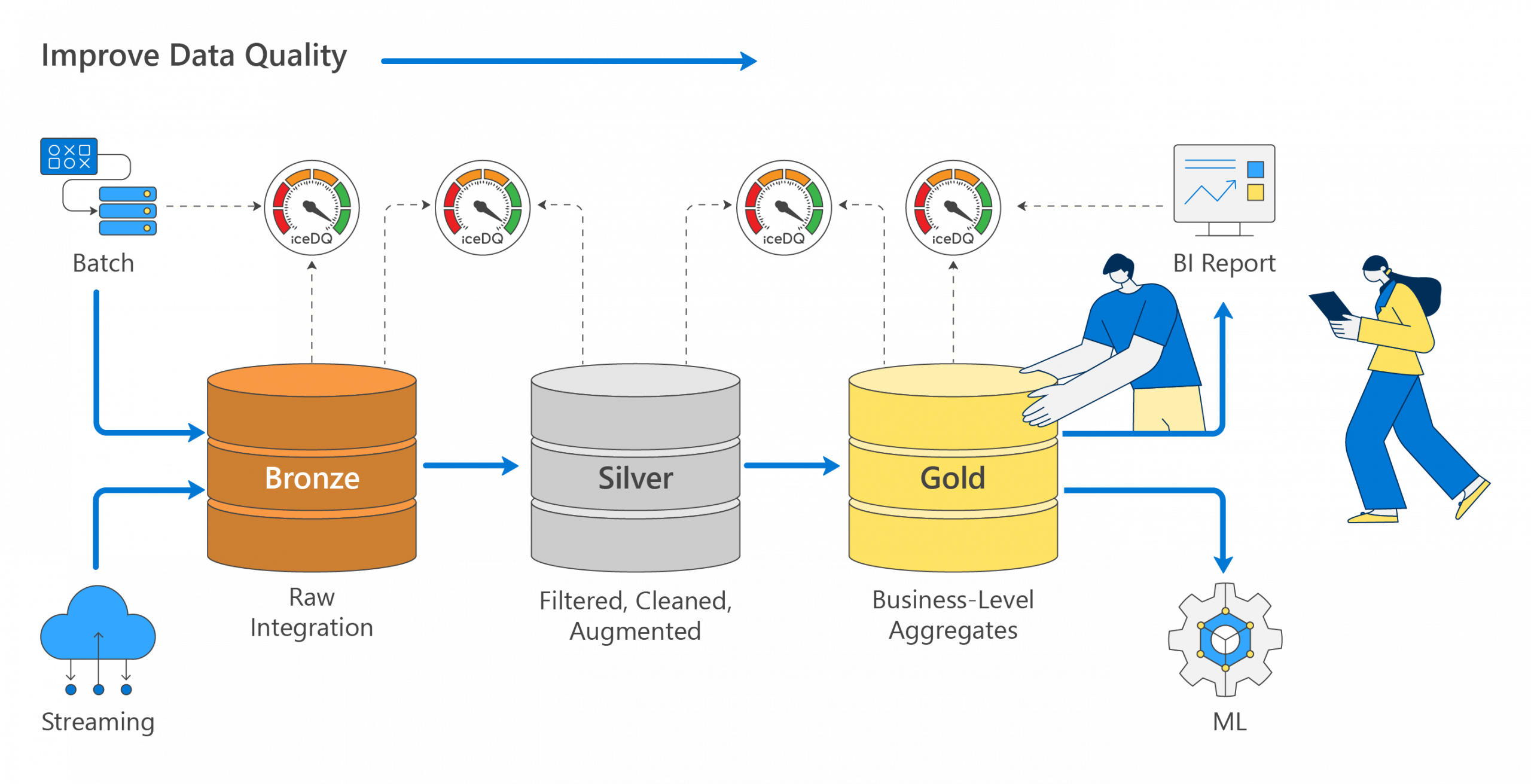

These issues are particularly acute in data lakehouse environments with Medallion architecture, where data flows through Bronze (raw), Silver (cleansed), and Gold (business-ready) layers. Each layer transition represents a potential failure point requiring validation.

Ensuring Data Integrity with iceDQ

iceDQ, the integrated check engine for Data Quality, believes data pipelines should be monitored like factory assembly lines. Just as manufacturing requires continuous quality checks at every production stage, data pipelines require continuous validation at every transformation step.

How iceDQ Validates & Reconcile your Data

Whether you’re running a traditional data warehouse, a data lake, or a modern data lakehouse, iceDQ provides comprehensive data reconciliation:

Source to Target Reconciliation

- Record count validation: Compares row counts between source systems and target tables

- Aggregate reconciliation: Validates that key numeric fields match between systems

- Schema drift detection: Identifies source system changes before they cause corruption

- Freshness monitoring: Ensures data loads complete on schedule with expected volumes

Layer-to-Layer Validation (Medallion Architecture)

- Bronze to Silver: Validates cleansing rules, deduplication, and data type standardization

- Silver to Gold: Ensures aggregations and business calculations are accurate

- Gold to BI Reports: Confirms dashboard numbers match underlying data

Cross-System Reconciliation

- Compare data across data warehouses, data lakes, and lakehouses

- Validate ERP to analytics platform consistency

- Reconcile source systems against reporting outputs

iceDQ Capabilities for Modern Data Platforms

Native Big Data Connectivity

iceDQ’s Big Data Edition provides native connectivity to modern data platforms:

- Apache Spark and Databricks for distributed processing

- Delta Lake, Apache Iceberg, and Apache Hudi table formats

- Cloud warehouses: Snowflake, BigQuery, Redshift, Synapse

- Traditional databases: Oracle, SQL Server, PostgreSQL, MySQL

Automated Monitoring

- Scheduled validation jobs aligned with data pipeline refresh cycles

- Event-driven execution triggered by pipeline completion

- Schema drift detection: Identifies source system changes before they cause corruption

- Integration with orchestration tools: Airflow, Azure Data Factory, AWS Step Functions

- Automated alerting when discrepancies are detected

Comprehensive Reporting

- Data quality dashboards showing health status across your architecture

- Trend analysis to identify recurring issues

- Drill-down from summary metrics to individual failed records

Business Impact: From Data Quality to Business Value

Organizations implementing iceDQ across their data architecture report significant improvements:

| Metric | Without iceDQ | With iceDQ |

| Issue Detection Time | Days or weeks | Minutes after load |

| Root Cause Analysis | Manual investigation | Automated lineage |

| Testing Approach | Ad-hoc SQL scripts | Automated validation |

| Revenue Impact | Unknown leakage | Prevented proactively |

Conclusion

The evolution from data warehouse to data lake to data lakehouse represents significant progress in enterprise data management. Each architecture offers distinct advantages depending on your BI reporting and data science requirements.

But architectural elegance means nothing if the data flowing through your systems cannot be trusted. Whether you’re building a new data lakehouse or maintaining a legacy warehouse, continuous data validation is essential for delivering reliable insights to your business.

iceDQ features an integrated check engine, enabling continuous monitoring to help your data platform consistently deliver precise, comprehensive, and reliable data for all business decisions.

Ready to Ensure Data Quality Across Your Data Platform?

Learn how iceDQ can validate & reconcile data across your data warehouse, data lake, or data lakehouse architecture.

Related Topics: data warehouse vs data lake, data lakehouse architecture, medallion architecture, data quality, big data testing, ETL testing, data reconciliation, Delta Lake, Apache Iceberg, data governance, data pipeline validation

Share this article